Depth-Visual-Inertial (DVI) Mapping for Robust Indoor 3D Reconstruction

Abstract

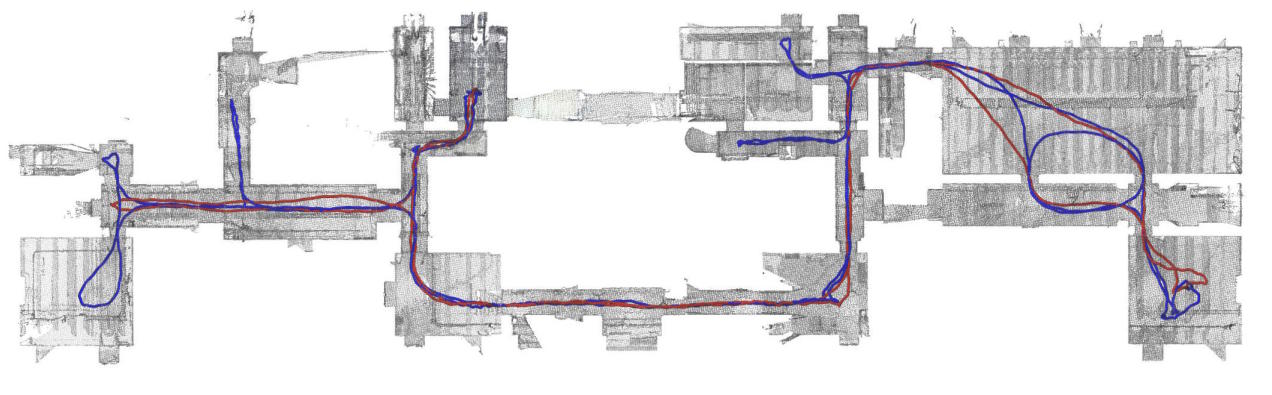

We propose the Depth-Visual-Inertial (DVI) mapping system: a robust multi-sensor fusion framework for dense 3D mapping using time-of-flight cameras equipped with RGB and IMU sensors. Inspired by recent developments in real-time LiDAR-based odometry and mapping, our system uses an error-state iterative Kalman filter for state estimation: it propagates the state using the inertial sensor’s data, then updates it first with visual-inertial odometry and then with depth-based odometry. This sensor fusion scheme makes our system robust to degenerate scenarios (e.g. lack of visual or geometric features, fast rotations) and to noisy sensor data, such as that produced by off-the-shelf time-of-flight DVI sensors. For evaluation, we propose the new Bunker DVI Dataset, featuring data from multiple DVI sensors recorded in challenging conditions reflecting search-and-rescue operations. We show that our method is more robust and more precise than previous work. Following the open science principle, we make both our source code and dataset publicly available.
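To illustrate the propagate-then-update ordering described in the abstract, the following is a minimal sketch using a plain scalar linear Kalman filter. It is not the error-state iterative Kalman filter of the DVI system, and it is not taken from the released source code: the names `ScalarState`, `propagate`, `update`, and `fuse_step`, as well as all numeric values, are hypothetical and chosen only for illustration. The sketch assumes each sensor directly measures the single state variable, and it skips an update when a measurement is unavailable, which loosely mirrors how a fusion scheme can tolerate a degenerate visual or depth input.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ScalarState:
    x: float  # state estimate (e.g. position along one axis)
    p: float  # variance of the estimate


def propagate(s: ScalarState, velocity: float, dt: float, process_var: float) -> ScalarState:
    """Prediction: integrate a motion input and inflate the uncertainty."""
    return ScalarState(s.x + velocity * dt, s.p + process_var)


def update(s: ScalarState, z: float, meas_var: float) -> ScalarState:
    """Standard Kalman update for a direct measurement z of the state."""
    k = s.p / (s.p + meas_var)  # Kalman gain
    return ScalarState(s.x + k * (z - s.x), (1.0 - k) * s.p)


def fuse_step(
    s: ScalarState,
    velocity: float,
    dt: float,
    process_var: float,
    visual: Optional[Tuple[float, float]] = None,  # (measurement, variance), hypothetical
    depth: Optional[Tuple[float, float]] = None,   # (measurement, variance), hypothetical
) -> ScalarState:
    """One filter cycle: propagate, then update with visual, then with depth."""
    s = propagate(s, velocity, dt, process_var)
    if visual is not None:  # skip if visual tracking is unavailable
        s = update(s, *visual)
    if depth is not None:   # skip if depth registration is unavailable
        s = update(s, *depth)
    return s


prior = ScalarState(x=0.0, p=1.0)
only_imu = fuse_step(prior, velocity=1.0, dt=0.1, process_var=0.01)
with_visual = fuse_step(prior, 1.0, 0.1, 0.01, visual=(0.12, 0.04))
with_both = fuse_step(prior, 1.0, 0.1, 0.01, visual=(0.12, 0.04), depth=(0.09, 0.01))
print(only_imu.p, with_visual.p, with_both.p)
```

In this toy setting, each additional update shrinks the variance, and dropping one measurement still leaves a usable estimate from the remaining sources.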

Acknowledgments

This work was funded in part by Belgium’s Royal Higher Institute for Defence (RHID) under Grant DAP18/04 and Grant DAP22/01.
